In 2025, I resolve to make my application environment more resilient

Endre Sara

January 16, 2025

What teams can do in 2025 to be proactive and predict changes they’ll need to make to their environment

Yesterday, we talked about being more proactive about the code our developers write, anticipating how code changes will impact our systems and infrastructure more broadly. Now, we turn our attention to planning ahead for external factors that could affect our systems.

Environmental changes are inevitable in any complex system. Whether it’s a sudden traffic spike, an unexpected hardware failure, or gradual infrastructure degradation, external pressures can quickly destabilize even the most carefully designed environments. The challenge lies in anticipating these changes before they disrupt services. In distributed systems, where small disruptions can ripple across dependencies, waiting until issues surface is simply not an option.

Proactive observability provides a necessary safeguard against these challenges. By identifying potential risks in real time, predicting future demand, and adapting resources dynamically, teams can maintain reliability even under extreme conditions. This isn’t about eliminating unpredictability; it’s about building systems that respond intelligently to it.

Challenges to being more proactive about environment changes

Three core challenges make proactive management of environmental changes difficult: predicting load trajectories, gaining forward-looking visibility into the broader system, and scaling resources efficiently. While various tools exist to address parts of these problems, they often fall short in providing the level of predictability, visibility, and adaptability that modern systems require.

Predicting future load is difficult

Predicting how traffic patterns will evolve is one of the most complex aspects of managing modern systems. Seasonal events, marketing campaigns, or even unforeseen surges can create spikes in demand that exceed system capacity.

As user counts and load grow, developers must evaluate how well the system scales. This involves monitoring how many API calls each user request makes to backend services and modeling the anticipated growth in API usage based on projected user activity. Monitoring tools can provide real-time data to understand current usage patterns, but they cannot predict the behavior of dependent services.

Load-testing tools like Grafana Labs' k6 and Apache JMeter are commonly used during development to simulate expected traffic patterns. These tools are useful for pre-deployment stress testing but are static by design: they can’t account for real-time changes in traffic behavior or external variables like regional events or user behavior shifts. As a result, engineers often find themselves underprepared, scrambling to adjust capacity once demand has already outpaced infrastructure.
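
The fan-out modeling described above (API calls per user request, multiplied by projected user activity) can be sketched in a few lines. This is only an illustration: the `FanOut` type, the `project_backend_load` function, the service names, and the compound monthly growth rate are all assumptions made for the example, and a real model would take fan-out ratios from tracing data rather than constants.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FanOut:
    """Average number of calls one user request makes to a backend service."""
    service: str
    calls_per_request: float


def project_backend_load(
    user_requests_per_sec: float,
    monthly_growth: float,
    months: int,
    fan_out: list[FanOut],
) -> dict[str, list[float]]:
    """Return projected calls/sec per backend service for months 0..months.

    Assumes compound growth in user requests and a constant fan-out ratio,
    both of which are simplifications.
    """
    projection: dict[str, list[float]] = {}
    for f in fan_out:
        projection[f.service] = [
            user_requests_per_sec * (1 + monthly_growth) ** m * f.calls_per_request
            for m in range(months + 1)
        ]
    return projection


if __name__ == "__main__":
    # Hypothetical services and ratios, for illustration only.
    fan_out = [FanOut("catalog", 3.0), FanOut("pricing", 1.5)]
    for service, series in project_backend_load(200.0, 0.10, 6, fan_out).items():
        print(service, [round(v) for v in series])
```

Comparing each projected series against the measured capacity of the corresponding service gives a rough idea of which dependency is likely to saturate first.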

Forward-looking visibility into evolving systems is limited

Proactively managing environmental changes requires visibility not just into what’s happening now but into how the system is likely to evolve. For instance, if a service is handling a steadily increasing workload, engineers need to predict how soon it will hit a performance bottleneck and which dependent services may be affected.

For systems using messaging architectures, such as event-driven designs, it’s crucial to evaluate the rate at which messages are published and consumed. Developers need to assess whether consumers can process messages fast enough to keep up with the increased production rate. This may involve checking the throughput of message queues (e.g., Kafka, RabbitMQ) and ensuring sufficient worker threads or instances are available to process the messages without creating backlogs.
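
The publish-versus-consume check can be reduced to simple arithmetic. The sketch below is a back-of-the-envelope model, not a Kafka or RabbitMQ API: the function names, rates, and the 20% default headroom are assumptions for illustration, and it treats every consumer as processing messages at the same steady rate.

```python
import math


def consumers_needed(
    publish_rate: float,
    per_consumer_rate: float,
    headroom: float = 0.2,
) -> int:
    """Minimum consumers required to absorb publish_rate plus a headroom fraction."""
    if per_consumer_rate <= 0:
        raise ValueError("per_consumer_rate must be positive")
    return math.ceil(publish_rate * (1 + headroom) / per_consumer_rate)


def backlog_after(
    seconds: float,
    publish_rate: float,
    consumers: int,
    per_consumer_rate: float,
    initial_backlog: float = 0.0,
) -> float:
    """Projected queue depth after `seconds`, assuming steady rates."""
    net_rate = publish_rate - consumers * per_consumer_rate
    return max(0.0, initial_backlog + net_rate * seconds)


if __name__ == "__main__":
    # Hypothetical rates in messages per second.
    print(consumers_needed(publish_rate=1000, per_consumer_rate=150))
    print(backlog_after(60, publish_rate=1000, consumers=5, per_consumer_rate=150))
```

A positive net rate means the backlog grows without bound until consumers are added or the publish rate drops, which is the situation this kind of check is meant to flag early.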

Database performance is another critical factor. As the number of user requests grows, so does the frequency of database queries. Developers must analyze the types of queries executed, the tables affected, and whether the database schema or indexes need optimization. Load testing can simulate increased query volume to predict the impact on database performance and resource usage. This analysis helps in determining whether the existing database can handle the load with vertical or horizontal scaling or if architectural changes, such as database sharding or read replicas, are necessary.

Current tools often provide snapshots of real-time metrics but lack the ability to project those metrics forward.

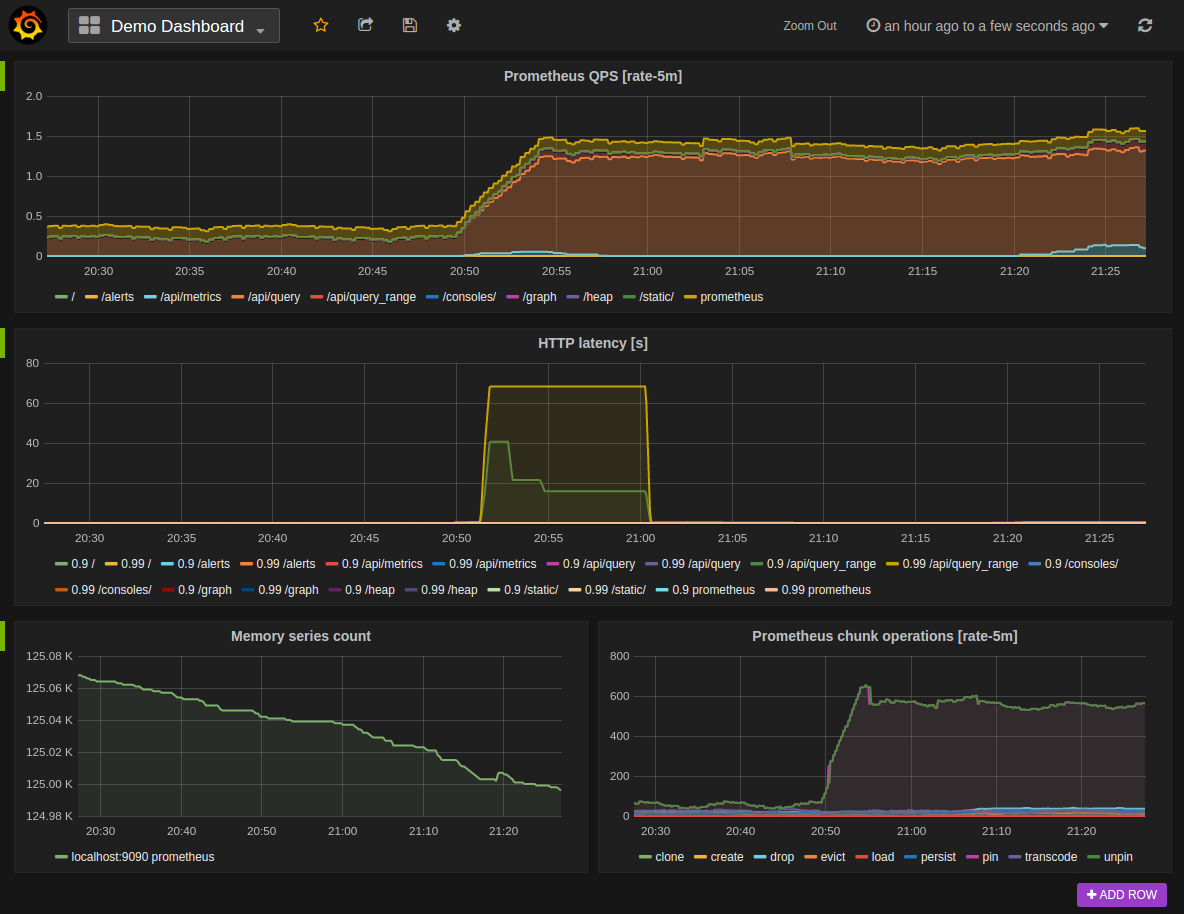

Datadog and New Relic offer dashboards that display system dependencies and performance under current conditions, but they focus on reactive analysis. Similarly, Prometheus provides excellent real-time monitoring for resource metrics like CPU or memory utilization, but its built-in forecasting is limited to simple extrapolation such as PromQL's predict_linear() function, which is not enough on its own to identify future stress points.

This lack of forward-looking insight forces engineers into a reactive posture, leaving them vulnerable to issues that could have been anticipated and avoided.

Scaling resources efficiently is complicated

Scaling infrastructure to meet demand is both a reliability and cost challenge. Reactive scaling, where systems respond only after resource thresholds are exceeded, risks under-provisioning during critical moments, leading to downtime. On the other hand, over-provisioning wastes resources and drives up operational costs.

Tools like Kubernetes Horizontal Pod Autoscaler (HPA) and AWS Auto Scaling automate scaling based on resource metrics like CPU or memory utilization. While these tools are helpful, their default metric-driven policies are fundamentally reactive: they adjust only after usage patterns have shifted. Without a forecast of future demand to act on, they cannot scale resources ahead of time, leaving teams vulnerable to sudden surges or inefficiencies.
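
To make the reactive behavior concrete: the Kubernetes documentation gives the core HPA calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), which depends only on what is being measured right now. The sketch below reproduces that formula and contrasts it with a hypothetical forecast-driven calculation. `replicas_for_forecast` is an assumption for illustration, not part of Kubernetes, and the real controller also applies a tolerance band, stabilization windows, and min/max bounds that are omitted here.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
) -> int:
    """Core HPA formula: scale in proportion to the currently observed metric."""
    return math.ceil(current_replicas * current_metric / target_metric)


def replicas_for_forecast(
    forecast_rps: float,
    rps_per_pod: float,
    min_replicas: int = 1,
) -> int:
    """Hypothetical proactive sizing: provision for forecast load, not current load."""
    return max(min_replicas, math.ceil(forecast_rps / rps_per_pod))


if __name__ == "__main__":
    # Reactive: 4 pods at 90% CPU against a 60% target.
    print(hpa_desired_replicas(4, current_metric=90.0, target_metric=60.0))
    # Proactive: size for a forecast of 2500 requests/sec at 200 requests/sec per pod.
    print(replicas_for_forecast(2500.0, 200.0))
```

The first function can only respond once utilization has already climbed; the second needs a demand forecast as input, which is exactly the piece that metric-driven autoscaling does not supply.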

What we require to be more proactive

Proactively managing environmental changes requires observability systems that go beyond traditional monitoring and alerting. These systems must not only detect anomalies in real time but also provide predictive insights and adaptive workflows that empower teams to anticipate and mitigate risks before they impact users. Here are the critical capabilities needed to achieve this:

- Real-time anomaly detection at scale. Teams need to identify traffic surges, resource bottlenecks, and unexpected failures as they occur. Systems should process vast amounts of data with minimal latency, identifying deviations from normal patterns. Early detection prevents small issues from spiraling into widespread outages.

- Predictive analytics for load and capacity needs. Beyond real-time data, teams also need analytics that use historical and live data to forecast future system behavior. These systems should forecast where and when additional capacity will be needed, including across long-term patterns such as seasonal demand spikes. Predictive insights allow teams to allocate resources proactively.

- Integrated resource management. Knowing what to do is not enough; teams also need to act on it quickly. Observability and resource management systems should be deeply integrated so that predictive insights translate directly into action.

- Comprehensive system modeling. Teams need the ability to test the impact of environmental changes before they happen. This includes simulating traffic surges, dependency failures, or configuration changes in a controlled environment to identify potential risks and validate resilience.

- Clear, actionable insight. Systems must provide insights that are not only detailed but also actionable. This includes translating complex system behavior into clear recommendations that engineers and decision-makers can act on quickly. Think context-rich alerts and dashboards that highlight the root cause of anomalies, the potential impact of changes, and the recommended actions to mitigate them.
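
As one small illustration of the first capability in this list, the sketch below flags samples that deviate sharply from a trailing window of recent values using a rolling z-score. It is a minimal single-metric example under stated assumptions: the function name, window size, and threshold are hypothetical, and production anomaly detection at scale would need to handle seasonality, many metrics, and streaming ingestion.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, Iterator, Tuple


def detect_anomalies(
    samples: Iterable[float],
    window: int = 30,
    threshold: float = 3.0,
) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for samples far from the trailing-window mean.

    A sample is flagged when it lies more than `threshold` standard
    deviations from the mean of the previous `window` samples. Nothing is
    flagged until the window is full or while the window has zero variance.
    """
    history: Deque[float] = deque(maxlen=window)
    for i, value in enumerate(samples):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield i, value
        # Flagged values still enter the window, which widens the baseline
        # for a while after a spike.
        history.append(value)


if __name__ == "__main__":
    # Hypothetical requests/sec samples with one obvious spike.
    traffic = [100, 102, 98, 101, 99] * 4 + [180, 100]
    for index, value in detect_anomalies(traffic, window=10):
        print(f"sample {index}: {value} deviates from recent baseline")
```

A detector like this only says that something changed; the other capabilities in the list are what turn that signal into a forecast, a root cause, and a recommended action.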

Achieving true proactive observability requires more than just reacting to anomalies. It demands systems that predict, adapt, and empower teams to address environmental changes before they become critical. With these capabilities in place, organizations can ensure their systems remain stable and reliable, no matter what external pressures arise.

Causely delivers a proactive observability system

Our Causal Reasoning Platform is a model-driven, purpose-built AI system delivering multiple analytics built on a common data model. You can learn more about its capabilities that uniquely help teams proactively anticipate and plan for environment changes here.

Conclusion

Environmental changes like traffic spikes or hardware degradation are inevitable, but their impact doesn’t have to mean downtime. Reacting to problems after they occur leaves teams in firefighting mode, scrambling to restore stability. The key to reliability isn’t just responding quickly—it’s anticipating risks and adapting before issues arise.

Proactive observability makes this possible. With real-time anomaly detection, predictive analytics, and adaptive workflows, teams can prevent disruptions and maintain stability under changing conditions. By adopting tools and practices that prioritize foresight over reaction, engineers can shift from firefighting to proactive system management, ensuring their systems remain reliable no matter what comes next.