Explainability: The Black Box Dilemma in the Real World

Causely

August 7, 2024

The software industry is at a crossroads. I believe those who embrace explainability as a key part of their strategy will emerge as leaders. Those who resist will risk losing customer confidence and market share. The time for obfuscation is over. The era of explainability has begun.

What Is The Black Box Dilemma?

Imagine a masterful illusionist, their acts so breathtakingly deceptive that the secrets behind them remain utterly concealed. Today’s software is much the same. We marvel at its abilities to converse, diagnose, drive, and defend, yet the inner workings often remain shrouded in mystery. This is often referred to as the “black box” problem.

The recent CrowdStrike incident is a stark reminder of the risks of this opacity. A routine update to its Falcon security software, intended to strengthen protection, instead crashed Windows systems around the world. It’s as if the magician’s assistant accidentally dropped the secret prop, revealing the illusion for what it truly was: an error-prone process with no resilience. Had organizations understood the intricacies of CrowdStrike’s software release process, they might have been better equipped to mitigate risks and prevent the disruptions that followed.

This incident, coupled with the rapid advancements in AI, underscores the critical importance of explainability. Understanding the entire lifecycle of software – from conception to operation – is no longer optional but imperative. It is the cornerstone of trust, a shield against catastrophic failures, and an important foundation for accountability.

As our world becomes increasingly reliant on complex systems, understanding their inner workings is no longer a luxury but a necessity. Explainability acts as a key to unlocking the black box, revealing the logic and reasoning behind complex outputs. By shedding light on the decision-making processes of software, AI, and other sophisticated systems, we foster trust, accountability, and a deeper comprehension of their impact.

The Path Forward: Cultivating Explainability in Software

Achieving explainability demands a comprehensive approach that addresses several critical dimensions.

- Software-Centric Reasoning and Ethical Considerations: Can the system’s decision-making process be transparently articulated, justified, and aligned with ethical principles? Explainability is essential for building trust and ensuring that systems used to support decision-making operate fairly and responsibly.

- Effective Communication and User Experience: Is the system able to communicate its reasoning clearly and understandably to both technical and non-technical audiences? Effective communication enhances collaboration, knowledge sharing, and user satisfaction by empowering users to make informed decisions.

- Robust Data Privacy and Security: How can sensitive information be protected while preserving the transparency necessary for explainability? Rigorous data handling and protection are crucial for safeguarding privacy and maintaining trust in the system.

- Regulatory Compliance and Continuous Improvement: Can the system effectively demonstrate adherence to relevant regulations and industry standards for explainability? Explainability is a dynamic process requiring ongoing evaluation, refinement, and adaptation to stay aligned with the evolving regulatory landscape.

By prioritizing these interconnected elements, software vendors and engineering teams can create solutions where explainability is not merely a feature, but a cornerstone of trust, reliability, and competitive advantage.

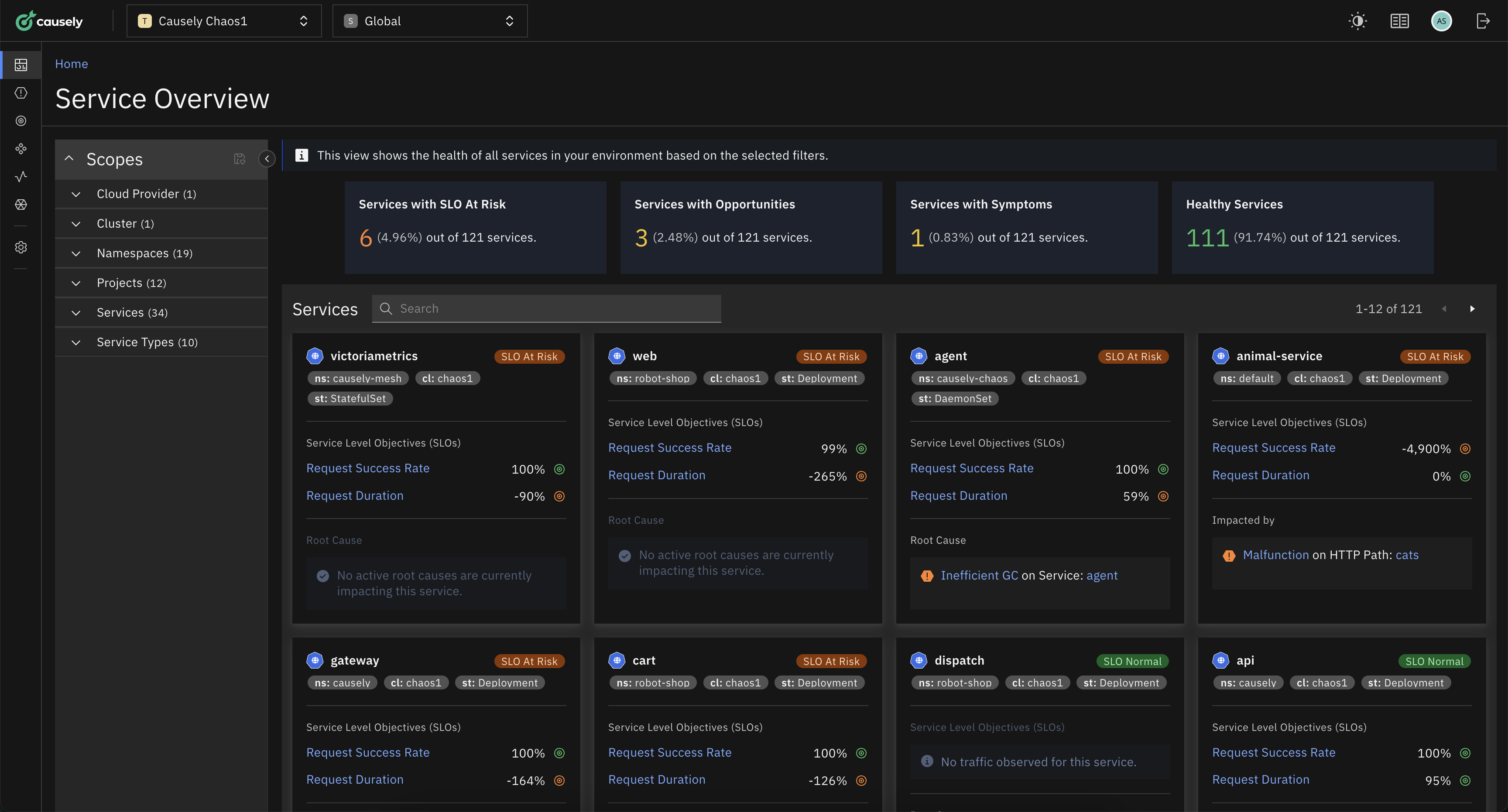

An Example of Explainability in Action with Causely

Causely is a pioneer in applying causal reasoning to revolutionize cloud-native application reliability. Our platform empowers operations teams to rapidly identify and resolve the root causes of service disruptions, preventing issues before they impact business processes and customers. This enables dramatic reductions in Mean Time to Repair (MTTR), minimizing business disruptions and safeguarding customer experiences.

Causely also uses its Causal Reasoning Platform to manage its own SaaS offering, detecting and responding to service disruptions and ensuring swift resolution with minimal impact. You can learn more about this in Endre Sara’s article “Eating Our Own Dogfood: Causely’s Journey with OpenTelemetry & Causal AI”.

Causal Reasoning, often referred to as Causal AI, is a specialized field in computer science dedicated to uncovering the underlying cause-and-effect relationships within complex data. As the foundation of explainable AI, it overcomes a key limitation of traditional statistical methods, which frequently surface misleading correlations.

Unlike opaque black-box models, causal reasoning illuminates the precise mechanisms driving outcomes, providing transparent and actionable insights. When understanding the why behind an event is as important as predicting the what, causal reasoning offers superior clarity and reliability.

By understanding causation, we transcend mere observation to gain predictive and interventional power over complex systems.

Causely is built on a deep-rooted foundation of causal reasoning. The founding team, pioneers in applying this science to IT operations, led the charge at System Management Arts (SMARTS). SMARTS revolutionized root cause analysis for distributed systems and networks, empowering more than 1,500 global enterprises and service providers to ensure the reliability of mission-critical IT services. Their groundbreaking work earned industry accolades and solidified SMARTS as a leader in the field.

Explainability is a cornerstone of the Causal Reasoning Platform from Causely. The company is committed to transparently communicating how its software arrives at its conclusions, covering both the underlying mechanisms of causal reasoning and their practical application within organizations’ operational workflows.

Explainable Operations: Enhancing Workflow Efficiency for Continuous Application Reliability

Causely converts raw observability data into actionable insights by pinpointing root causes and their cascading effects within complex application and infrastructure environments.

Today, incident response is a complex, resource-intensive process of triage and troubleshooting that often diverts critical teams from strategic initiatives. When application reliability is not continuously assured, this reactive approach hampers innovation, erodes efficiency, and can lead to substantial financial losses and reputational damage.

The complex, interconnected nature of cloud-native environments magnifies the impact: when Root Cause problems occur, their effects can cascade and propagate across services.

By automating the identification and explanation of cause-and-effect relationships, Causely accelerates incident response. The teams responsible for root cause problems receive immediate alerts, complete with detailed explanations of cause and effect, empowering them to prioritize remediation based on impact. Simultaneously, teams whose services are impacted learn what the root cause is and who is responsible for resolving it, enabling proactive risk mitigation without extensive troubleshooting.
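One way to picture automated cause-and-effect identification is a symptom-matching sketch: each candidate root cause predicts which services should degrade, and the candidate whose prediction best matches what is actually observed is reported, together with the symptoms it explains and the team that owns it. The Python below is a minimal illustration under simplifying assumptions (a hypothetical service graph and ownership map, failures that propagate to every caller, and a plain mismatch count as the score); it is not Causely’s algorithm or API.

```python
from dataclasses import dataclass

# Hypothetical dependency graph: each service lists the services it calls.
DEPENDS_ON: dict[str, list[str]] = {
    "frontend": ["checkout", "catalog"],
    "checkout": ["payments", "orders-db"],
    "catalog": ["catalog-db"],
    "payments": [],
    "orders-db": [],
    "catalog-db": [],
}

# Hypothetical ownership map, used to route each explanation to a team.
OWNERS: dict[str, str] = {
    "frontend": "web-team",
    "checkout": "commerce-team",
    "catalog": "catalog-team",
    "payments": "payments-team",
    "orders-db": "data-team",
    "catalog-db": "data-team",
}


def predicted_symptoms(root: str) -> set[str]:
    """Services expected to degrade if `root` fails: the root plus all transitive callers."""
    affected = {root}
    changed = True
    while changed:
        changed = False
        for service, deps in DEPENDS_ON.items():
            if service not in affected and any(d in affected for d in deps):
                affected.add(service)
                changed = True
    return affected


@dataclass
class Explanation:
    root_cause: str
    explained: set[str]    # observed symptoms this root cause accounts for
    unexplained: set[str]  # observed symptoms it cannot account for
    missing: set[str]      # predicted symptoms that were not observed

    @property
    def mismatch(self) -> int:
        # Hamming distance between the predicted and observed symptom sets
        return len(self.unexplained) + len(self.missing)


def diagnose(observed: set[str]) -> list[Explanation]:
    """Rank every candidate root cause by how well its predicted symptoms match observations."""
    ranked = []
    for candidate in DEPENDS_ON:
        predicted = predicted_symptoms(candidate)
        ranked.append(Explanation(candidate, predicted & observed,
                                  observed - predicted, predicted - observed))
    return sorted(ranked, key=lambda e: (e.mismatch, e.root_cause))


best = diagnose({"frontend", "checkout", "orders-db"})[0]
print(f"Root cause: {best.root_cause} (notify {OWNERS[best.root_cause]})")
# Root cause: orders-db (notify data-team)
print("Impacted services:", sorted(best.explained - {best.root_cause}))
# Impacted services: ['checkout', 'frontend']
```

Keeping the unexplained and missing sets alongside the answer is what makes the result explainable: an engineer can see exactly which evidence supports the diagnosis and which does not.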

For certain types of Root Cause problems, it may also be possible to automate remediation, resolving them as they occur without human intervention.

By maintaining historical records of the causes and effects of past Root Cause problems and identifying recurring patterns, Causely enables reliability engineering teams to anticipate potential future problems and implement targeted mitigation strategies.

Through “what if” analysis, Causely can also explain the effects of potential degradations and failures before they happen. This empowers reliability engineering teams to identify single points of failure, understand changes in load patterns, and assess the impact of planned changes on related applications, business processes, and customers.
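A “what if” question can be sketched as a failure simulation: mark a component as failed, propagate the failure through its dependents, and see what breaks. The hypothetical Python below models redundancy by letting each service require at least one healthy member of every dependency group, so a redundant API pair survives the loss of either instance while a shared database or cache does not. The topology and names are invented for illustration; this is not Causely’s what-if engine.

```python
# Hypothetical topology: each service needs at least one healthy member of every
# (non-empty) dependency group. A group with several members models redundancy.
REQUIRES: dict[str, list[set[str]]] = {
    "web": [{"api-a", "api-b"}],           # two redundant API instances
    "api-a": [{"db-primary"}, {"cache"}],
    "api-b": [{"db-primary"}, {"cache"}],
    "db-primary": [],
    "cache": [],
}


def what_if(failed: set[str]) -> set[str]:
    """Return every service that would be down if the services in `failed` failed."""
    down = set(failed)
    changed = True
    while changed:
        changed = False
        for service, groups in REQUIRES.items():
            # A service goes down once any dependency group has no healthy member left.
            if service not in down and any(group <= down for group in groups):
                down.add(service)
                changed = True
    return down


def single_points_of_failure(entry_point: str) -> list[str]:
    """Components whose failure, on its own, would take down `entry_point`."""
    return sorted(c for c in REQUIRES if c != entry_point and entry_point in what_if({c}))


print(sorted(what_if({"api-a"})))       # ['api-a']: web survives on api-b
print(sorted(what_if({"cache"})))       # ['api-a', 'api-b', 'cache', 'web']
print(single_points_of_failure("web"))  # ['cache', 'db-primary']
```

Running `what_if` against a proposed edit to `REQUIRES` is one way to preview the impact of a planned change before it ships.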

The result? Through explainability, organizations can dramatically reduce MTTR, improve business continuity, and free up more cycles for development and innovation. Causely turns reactive troubleshooting into proactive prevention, ensuring application reliability can be continuously assured.

Unveiling Causely: How Our Platform Delivers Actionable Insights

Given the historical challenges and elusive nature of automating root cause analysis in IT operations, customer skepticism is warranted in this field. This problem has long been considered the “holy grail” of the industry, with countless vendors falling short of delivering a viable solution.

As a consequence, Causely has found it important to prioritize transparency and explainability around how its Causal Reasoning Platform works and produces the results described earlier.

Much has been written about this approach; learn more here.

The approach is grounded in sound scientific principles, which makes it both effective and comprehensible.

Beyond understanding how the platform works, customers also value transparency around data handling. In this regard, our approach to data management offers unique benefits for data privacy and data management costs. You can learn more about this here.

In Summary

Explainability is the cornerstone of Causely’s mission. As they advance their technology, their dedication to transparency and understanding will only grow stronger. Don’t hesitate to visit the website or reach out to me or members of the Causely team to learn more about the approach and to experience Causely firsthand.