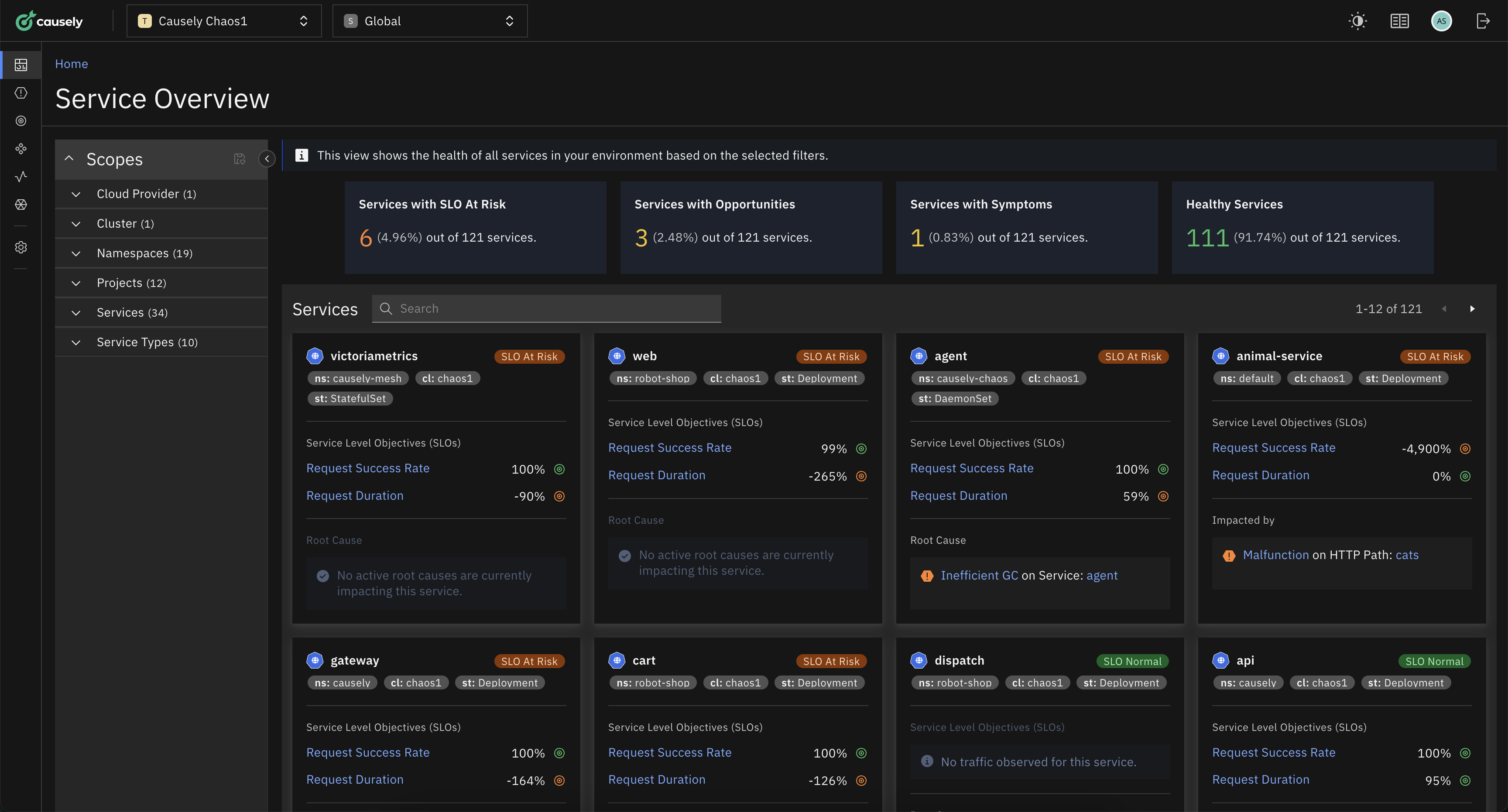

One million ways to slow down your application response time and throughput

Causely

June 12, 2023

This blog was originally posted on LinkedIn.

Navigating the Perilous Waters of Misconfigured MaxOpenConnection in Postgres Applications

Welcome to the inaugural post in our series, “One Million Ways to Slow Down Your Application Response Time and Throughput”. In this series, we will delve into the myriad of factors that, if neglected, can throw a wrench in the smooth operation of your applications.

Today, we bring to focus a common yet often under-appreciated aspect related to database configuration and performance tuning in PostgreSQL, affectionately known as Postgres. Although Postgres is renowned for its robustness and flexibility, it isn’t exempt from performance downturns if not properly configured. Our focus today shines on the critical yet frequently mismanaged parameter known as MaxOpenConnection.

Misconfiguration of this parameter can lead to skyrocketing response times and plummeting throughput, thereby negatively influencing your application’s performance and overall user experience. This lesson, as you’ll see, was born from our first hand experience.

How much you learnt from mistakes

The Awakening: From Error to Enlightenment

Our journey into understanding the critical role of the MaxOpenConnection parameter in Postgres performance tuning started with a blunder during the development of our Golang application. We employ Gorm to establish a connection to a Postgres database in our application. However, in the initial stages, we overlooked the importance of setting the maximum number of open connections with SetMaxOpenConns, a lapse that rapidly manifested its consequences.

Our API requests, heavily reliant on database interactions, experienced significant slowdowns. Our application was reduced to handling a scanty three Requests Per Second (RPS), resulting in a bottleneck that severely undermined the user experience.

This dismal performance prompted an extensive review of our code and configurations. The cause? Our connection configuration with the Postgres database. We realized that, by not setting a cap on the number of open connections, we were unwittingly allowing an unlimited number of connections, thereby overwhelming our database and causing significant delays in response times.

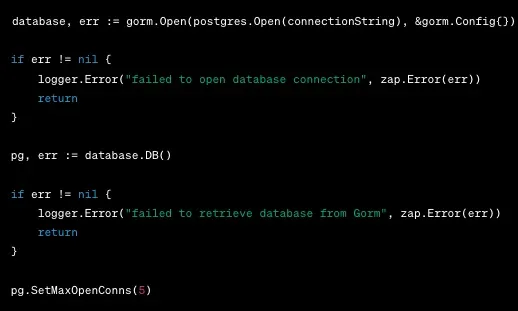

Quick to rectify our error, we amended our Golang code to incorporate the SetMaxOpenConns function, limiting the maximum open connections to five. Here’s the modified code snippet:

Code snippet with SetMaxOpenConns

The difference was monumental. With the same load test, our application’s performance surged, with our RPS amplifying by a remarkable 100 times. This situation underscored the significance of correctly configuring database connection parameters, specifically the MaxOpenConnection parameter.

The MaxOpenConnection Parameter: A Client-Side Perspective

When discussing connection management in a PostgreSQL context, it’s essential to distinguish between client-side and server-side configurations. While Postgres has a server-side parameter known as max_connections, our focus here lies on the client-side control, specifically within our application written in Golang using the GORM library for database operations.

From the client-side perspective, “MaxOpenConnection” is the maximum number of open connections the database driver can maintain for your application. In Golang’s database/SQL package, this is managed using the SetMaxOpenConns function. This function sets a limit on the maximum number of open connections to the database, curtailing the number of concurrent connections the client can establish.

If left un-configured, the client can attempt to open an unlimited number of connections, leading to significant performance bottlenecks, heightened latency, and reduced throughput in your application. Thus, appropriately managing the maximum number of open connections on the client-side is critical for performance optimization.

The Price of Neglecting SetMaxOpenConns

Overlooking the SetMaxOpenConns parameter can severely degrade Postgres database performance. When this parameter isn’t set, Golang’s database/SQL package doesn’t restrict the number of open connections to the database, allowing the client to open a surplus of connections. While each individual connection may seem lightweight, collectively, they can place a significant strain on the database server, leading to:

- Resource Exhaustion: Each database connection consumes resources such as memory and CPU. When there are too many connections, the database may exhaust these resources, leaving fewer available for executing actual queries. This can undermine your database’s overall performance.

- Increased Contention: Too many open connections, all vying for the database’s resources (like locks, memory buffers, etc.), result in increased contention. Each connection might have to wait its turn to access the resources it needs, leading to an overall slowdown.

- Increased I/O Operations: More open connections equate to more concurrent queries, which can lead to increased disk I/O operations. If the I/O subsystem can’t keep pace, this can slow down database operations.

Best Practices for Setting Max Open Connections to Optimize Postgres Performance

Establishing an optimal number for maximum open connections requires careful balance, heavily dependent on your specific application needs and your database server’s capacity. Here are some best practices to consider when setting this crucial parameter:

- Connection Pooling: Implementing a connection pool can help maintain a cache of database connections, eliminating the overhead of opening and closing connections for each transaction. The connection pool can be configured to have a maximum number of connections, thus preventing resource exhaustion.

- Tune Max Connections: The maximum number of connections should be carefully calibrated. It’s influenced by your application’s needs, your database’s capacity, and your system’s resources. Setting the number too high or too low can impede performance. The optimal max connections value strikes a balance between the maximum concurrent requests your application needs to serve and the resource limit your database can handle.

- Monitor and Optimize: Keep a constant eye on your database performance and resource utilization. If you observe a high rate of connection errors or if your database is using too many resources, you may need to optimize your settings.

Signing Off

Our experience highlights the importance of correct configuration when interfacing your application with a Postgres database, specifically parameters like MaxOpenConns. These parameters are not just trivial settings; they play a crucial role in defining the performance of both your application and the database.

Ignoring these parameters is akin to driving a car without brakes. By comprehending the implications of each setting and configuring them accordingly, you can stave off unnecessary performance bottlenecks and deliver a smoother, faster user experience. It’s not merely about making your application work – it’s about ensuring it functions efficiently and effectively.

To conclude, it’s crucial to understand that there is no universally applicable method to set up database connections. It’s not merely about setting thresholds for monitoring purposes, as this often leads to more disturbance than usefulness. The critical aspect to keep an eye on is potential misuse of the database connection by a client, leading to adverse effects on the database and its other clients. This becomes especially complex when dealing with shared databases, as the “noisy neighbor” phenomenon can exacerbate problems if an application isn’t correctly set up. Each application has distinct needs and behaviors, thus requiring a carefully thought-out, bespoke configuration to guarantee maximum efficiency.

Bonus

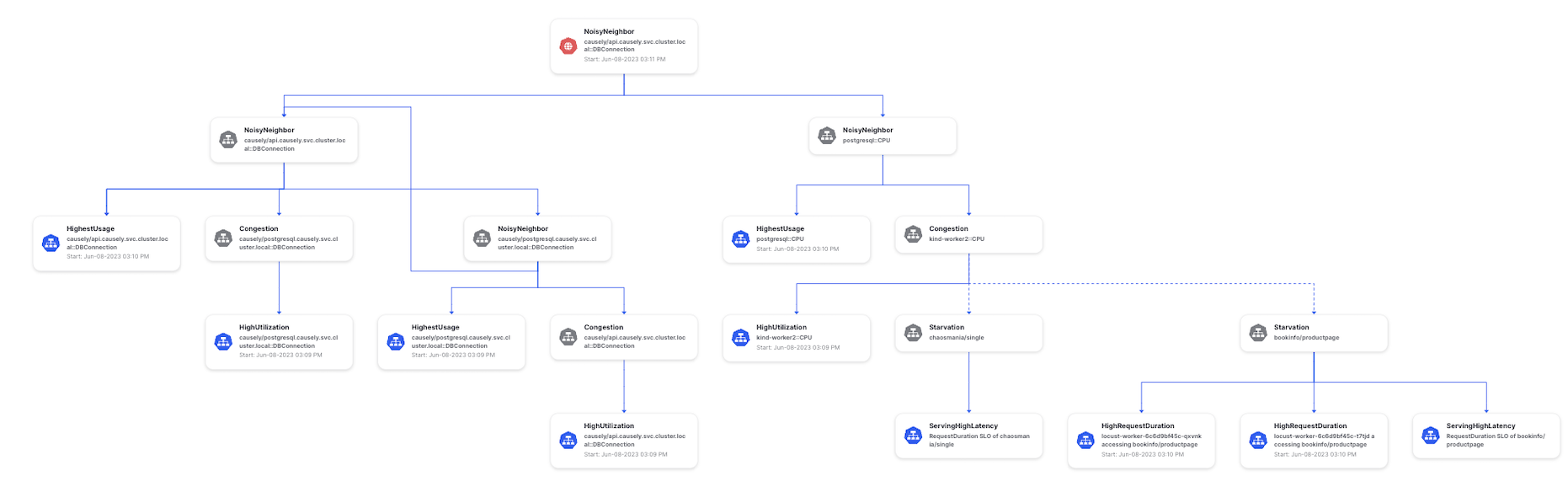

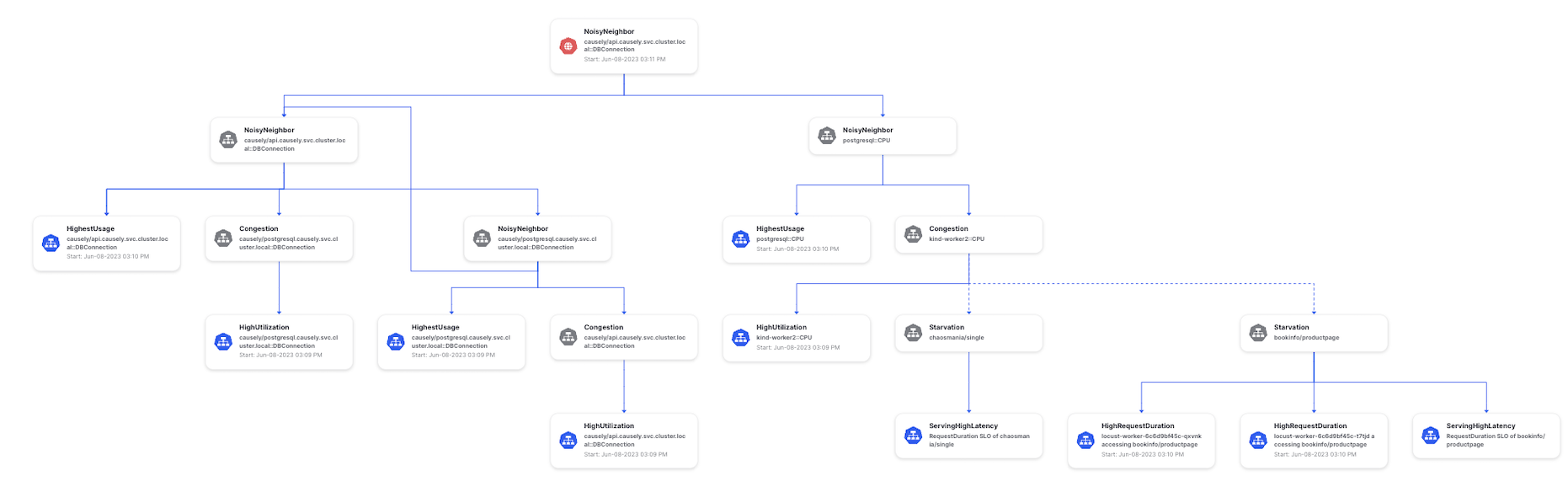

Curious about the potential symptoms caused by a noisy application on a database connection? Take a look at the causality view presented by Causely:

Application: Database Connection Noisy Neighbor causing service and infrastructure symptoms

According to the causality diagram, the application noisy neighbor of the database connection leads to increased CPU usage in the Postgres container. Consequently, the Postgres container becomes the noisy neighbor of the CPU on the specific Kind node where it runs on. This elevated CPU utilization on the Kind node directly results in higher service latency for clients attempting to access the service provided by the pods residing on the same node. Therefore, addressing each issue individually by merely allocating more resources equates to applying a temporary fix rather than a sustainable solution.

Learn more

- Read the blog: DevOps may have cheated death, but do we all need to work for the king of the underworld?

- Learn more about our causal AI platform for IT

- See Causely in action: Request a demo