Unveiling the Causal Revolution in Observability

Causely

December 6, 2023

Reposted with permission from LinkedIn.

OpenTelemetry and the Path to Understanding Complex Systems

Decades ago, the IETF (Internet Engineering Task Force) developed SNMP, a protocol that revolutionized network management. This standardization spurred a surge of innovation, fostering a new landscape of software vendors dedicated to streamlining operational processes in network management, encompassing Fault, Configuration, Accounting, Performance, and Security (FCAPS). Today, SNMP reigns as the world’s most widely adopted network management protocol.

On the cusp of a similar revolution stands the realm of application management. For years, the absence of management standards compelled vendors to develop proprietary telemetry for application instrumentation, to enable manageability. Many of the vendors also built applications to report on and visualize managed environments, in an attempt to streamline the processes of incident and performance management.

OpenTelemetry’s emergence is poised to transform the application management market dynamics in a similar way by commoditizing application telemetry instrumentation, collection, and export methods. Consequently, numerous open-source projects and new companies are emerging, building applications that add value around OpenTelemetry.

This evolution is also compelling established vendors to embrace OpenTelemetry. Their futures hinge on their ability to add value around this technology, rather than solely providing innovative methods for application instrumentation.

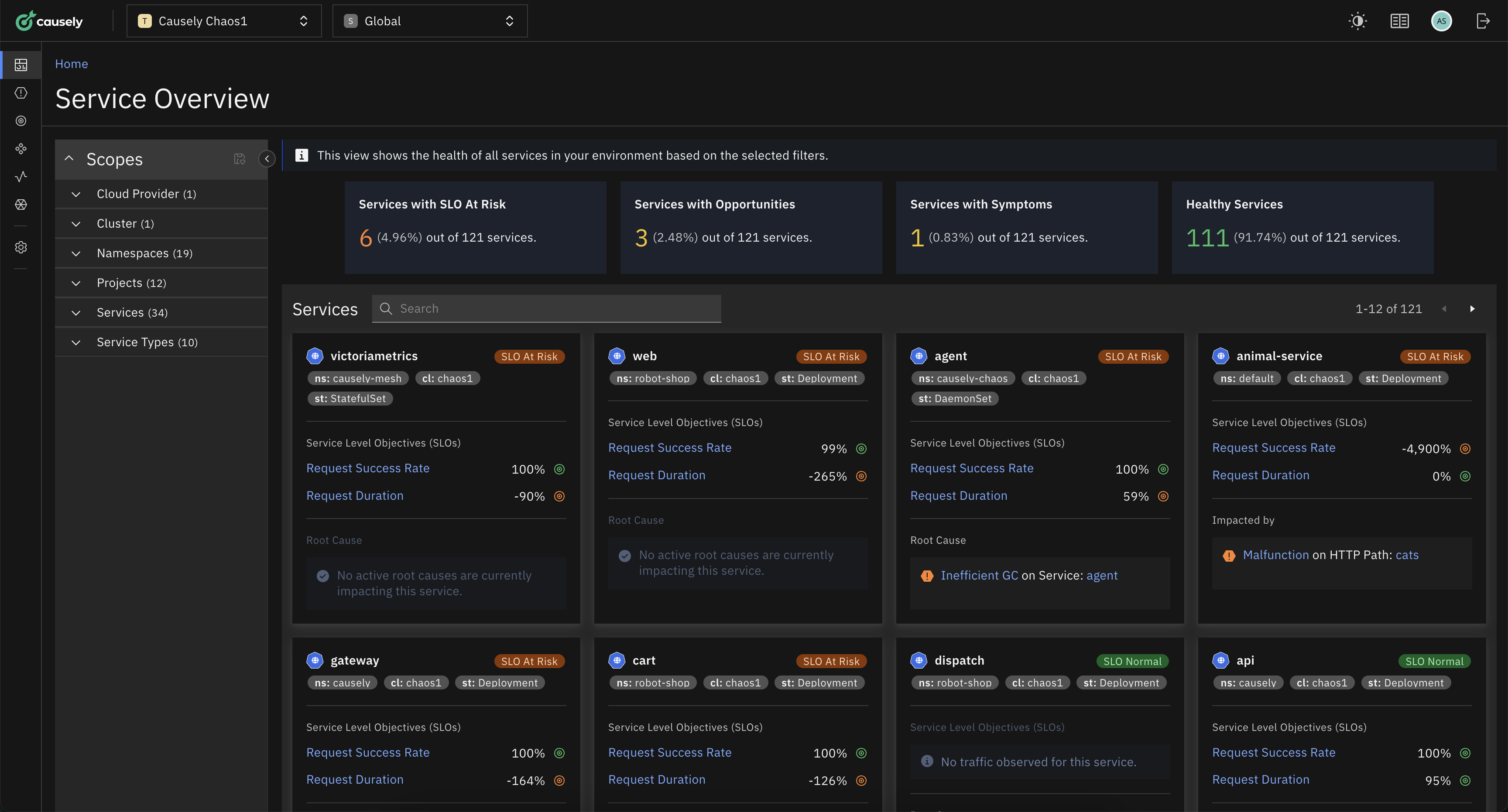

Adding Value Above OpenTelemetry

While OpenTelemetry simplifies the process of collecting and exporting telemetry data, it doesn’t guarantee the ability to pinpoint the root cause of issues. This is because understanding the causal relationships between events and metrics requires more sophisticated analysis techniques.

Common approaches to analyzing OpenTelemetry data that get devops teams closer to this goal include:

- Visualization and Dashboards: Creating effective visualizations and dashboards is crucial for extracting insights from telemetry data. These visualizations should present data in a clear and concise manner, highlighting trends, anomalies, and relationships between metrics.

- Correlation and Aggregation: To correlate logs, metrics, and traces, you need to establish relationships between these data streams. This can be done using techniques like correlation IDs or trace identifiers, which can be embedded in logs and metrics to link them to their corresponding traces.

- Pattern Recognition and Anomaly Detection: Once you have correlated data, you can apply pattern recognition algorithms to identify anomalies or outliers in metrics, which could indicate potential issues. Anomaly detection tools can also help identify sudden spikes or drops in metrics that might indicate performance bottlenecks or errors.

- Machine Learning and AI: Machine learning and AI techniques can be employed to analyze telemetry data and identify patterns, correlations, and anomalies that might be difficult to detect manually. These techniques can also be used to predict future performance or identify potential issues before they occur.
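The correlation and anomaly detection steps above can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the span and log records are hand-written sample data, the field names (`trace_id`, `duration_ms`, `message`) are assumptions rather than an OpenTelemetry schema, and the z-score threshold of 2.5 is an arbitrary choice for this small sample.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical sample data; real spans and logs would come from a telemetry backend.
spans = [
    {"trace_id": f"t{i}", "duration_ms": d}
    for i, d in enumerate([100, 102, 98, 101, 99, 103, 97, 100, 500, 101])
]
logs = [
    {"trace_id": "t2", "message": "cache hit"},
    {"trace_id": "t8", "message": "connection pool exhausted"},
    {"trace_id": "t8", "message": "retrying query"},
]


def group_logs_by_trace(records):
    """Correlate logs with traces by indexing messages under their trace ID."""
    by_trace = defaultdict(list)
    for record in records:
        by_trace[record["trace_id"]].append(record["message"])
    return by_trace


def anomalous_spans(spans, threshold=2.5):
    """Return spans whose duration lies more than `threshold` sample
    standard deviations away from the mean duration."""
    durations = [s["duration_ms"] for s in spans]
    mu, sigma = mean(durations), stdev(durations)
    if sigma == 0:
        return []
    return [s for s in spans if abs(s["duration_ms"] - mu) / sigma > threshold]


logs_by_trace = group_logs_by_trace(logs)
outliers = anomalous_spans(spans)
for span in outliers:
    # Pull up the logs that share the outlier's trace ID.
    print(span["trace_id"], span["duration_ms"], logs_by_trace.get(span["trace_id"], []))
```

The sketch flags the one slow request and surfaces the log lines that share its trace ID. It shows what happened alongside the slowdown, but it does not establish which event caused it.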

While all of these techniques might help to increase the efficiency of the troubleshooting process, human expertise is still essential for interpreting and understanding the results. This is because these approaches to analyzing telemetry data are based on correlation and lack an inherent understanding of cause and effect (causation).
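A small simulation can make the gap between correlation and causation concrete. The sketch below is illustrative only: the service names and the latency model are invented, and the partial correlation it computes is a textbook way to control for one known common cause, not a description of any particular causal analysis product. It assumes a shared database whose latency drives the latency of two otherwise independent services.

```python
import random
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def partial_correlation(x, y, z):
    """Correlation of x and y after removing the linear effect of z."""
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


rng = random.Random(0)  # fixed seed so the run is repeatable

# Assumed model: the database is the only thing the two services share.
db_latency = [rng.gauss(50, 10) for _ in range(2000)]
checkout_latency = [d + rng.gauss(0, 3) for d in db_latency]
search_latency = [0.8 * d + rng.gauss(0, 3) for d in db_latency]

raw = pearson(checkout_latency, search_latency)
controlled = partial_correlation(checkout_latency, search_latency, db_latency)
print(f"raw correlation: {raw:.2f}")
print(f"correlation after controlling for the database: {controlled:.2f}")
```

In this model the two services are strongly correlated even though neither affects the other, and the relationship largely disappears once the shared database is accounted for. A dashboard built on the raw correlation alone could send a team to investigate the wrong service.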

Avoiding The Correlation Trap: Separating Coincidence from Cause and Effect

In the analysis of observability data, correlation often takes center stage, highlighting the apparent relationship between two or more variables. However, correlation does not imply causation. Software-driven causal analysis addresses this crucial distinction and leads to better outcomes in the following ways:

Operational Efficiency And Control: Correlation-based approaches often leave us grappling with the question of “why,” hindering our ability to pinpoint the root cause of issues. This can lead to inefficient troubleshooting efforts, involving multiple teams in a devops environment as they attempt to unravel the interconnectedness of service entities.

Software-based causal analysis lets us bypass this guessing game by directly identifying the root cause and enabling targeted corrective actions. This not only streamlines problem resolution but also allows teams to proactively implement automations that mitigate future occurrences. It also frees up experts in devops organizations to focus on shipping features and working on business logic.

Consistency In Responding To Adverse Events: The speed and effectiveness of problem resolution often hinge on the expertise and availability of individuals, a variable factor that can delay critical interventions. Software-based causal analysis removes this human dependency, providing a consistent and standardized approach to root cause identification.

This consistency is particularly crucial in distributed devops environments, where multiple teams manage different components of the system. By leveraging software, organizations can ensure that regardless of the individuals involved, problems are tackled with the same level of precision and efficiency.

Predictive Capabilities And Risk Mitigation: Correlations provide limited insights into future behavior, making it challenging to anticipate and prevent potential problems. Software-based causal analysis, on the other hand, unlocks the power of predictive modeling, enabling organizations to proactively identify and address potential issues before they materialize.

This predictive capability becomes increasingly valuable in complex cloud-native environments, where the interplay of numerous microservices and data pipelines can lead to unforeseen disruptions. By understanding cause and effect relationships, organizations can proactively mitigate risks and enhance operational resilience.

Conclusion

OpenTelemetry marks a significant step towards standardized application management, laying a solid foundation for a more comprehensive understanding of complex systems. However, to truly unlock its full potential, the integration of software-driven causal analysis, also referred to as Causal AI, is essential. By transcending correlation, software-driven causal analysis empowers devops organizations to understand the cause and effect of system behavior, enabling proactive problem detection, predictive maintenance, operational risk mitigation, and automated remediation.

The founding team of Causely participated in the standards-driven transformation that took place in the network management market more than two decades ago at a company called SMARTS. The core of the SMARTS solution was built on Causal AI. SMARTS became the market leader in Root Cause Analysis for networks and was acquired by EMC in 2005. The team’s rich experience in Causal AI is now being applied at Causely to address the challenges of managing cloud-native applications.

Causely’s embrace of OpenTelemetry stems from the recognition that this standardized approach will only accelerate the advancement of application management. By streamlining telemetry data collection, OpenTelemetry creates a fertile ground for Causal AI to flourish.

If you are intrigued and would like to learn more about Causal AI, the team at Causely would love to hear from you, so don’t hesitate to get in touch.